Photo/Zheng Yuhang (NBD)

On June 4th, a Stanford AI team apologized for plagiarizing code from a Chinese large language model.

The team released its Llama3-V model on May 29, 2024, claiming that it could be trained for just $500 and still compete with GPT-4V.

Photo/X

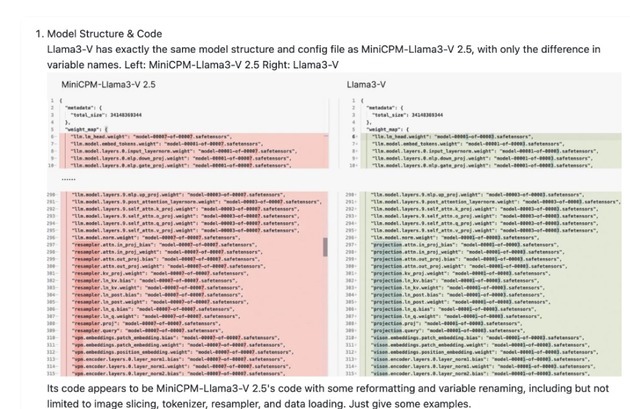

However, an observant netizen noticed a striking similarity between Stanford's Llama3-V and MiniCPM-Llama3-V 2.5, a Chinese large model developed by the Chinese large language model startup ModelBest and Tsinghua University's Natural Language Processing Laboratory.

The netizen posted a body of evidence on the GitHub page of ModelBest's project, showing that the two models share identical architectures, code, and configuration files, differing only in renamed variables.

The code comparison between the two models is sourced from GitHub.

Photo/GitHub

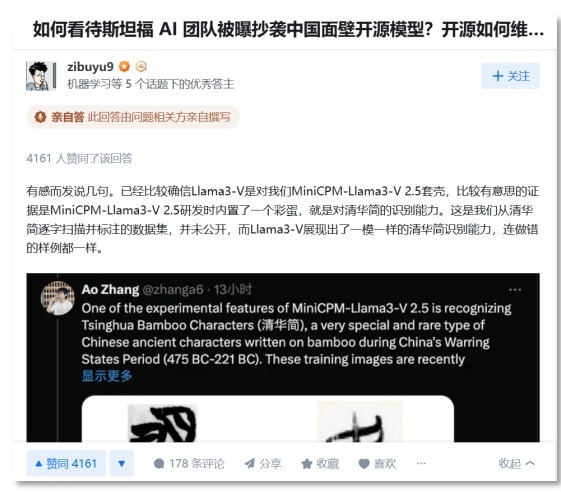

Dr. Liu Zhiyuan, Chief Scientist at ModelBest and a tenured associate professor at Tsinghua University, then responded on Zhihu (a Chinese Q&A platform). He disclosed that while developing MiniCPM-Llama3-V 2.5, the team had deliberately built in a hidden capability: recognizing the “Tsinghua Bamboo Slips”. Llama3-V turned out to have exactly the same capability.

The “Tsinghua Bamboo Slips” are a collection of bamboo slips from the late Warring States period, held by Tsinghua University since July 2008 and regarded as valuable artifacts. Dr. Liu further revealed that the training data for recognizing the Tsinghua Bamboo Slips had recently been scanned and annotated from newly excavated relics and has never been publicly released.

Photo/Zhihu

This critical evidence confirms the plagiarism.

The two models performed remarkably similarly on these recognition tasks: their correct answers closely matched, and they even made the same mistakes.

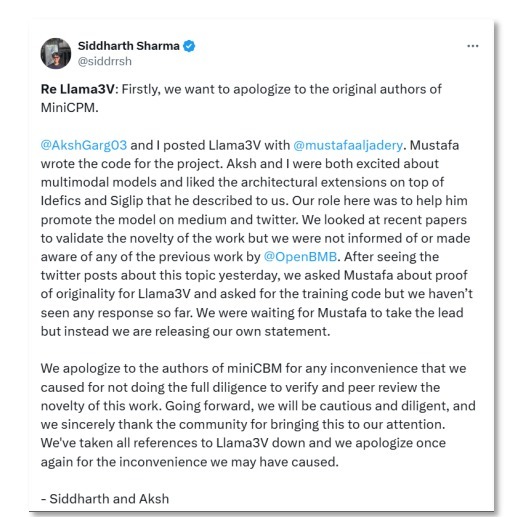

In the latest development, on June 4, two authors of the Stanford Llama3-V team, Siddharth Sharma and Aksh Garg, formally apologized to the ModelBest MiniCPM team for this academic misconduct. They also pledged to withdraw the Llama3-V model completely.

Photo/X

In a statement, ModelBest CEO Li Dahai expressed regret over the incident and said he hoped the team's work would be recognized on its own merits rather than through imitation or plagiarism. He also stressed the importance of open-source sharing to the development of AI.

ModelBest co-founder and Chief Scientist Liu Zhiyuan also issued a statement condemning the plagiarism as a violation of the trust that open-source collaboration depends on. He warned that the Llama3-V team's actions could discourage other researchers from sharing their work openly.

Photo/Yicai

Christopher David Manning, Director of the Stanford Artificial Intelligence Laboratory (SAIL), also publicly condemned the plagiarism.

Photo/X

Founded in August 2022, ModelBest has quickly gained traction, securing two major rounds of funding.

In April 2023, ModelBest completed its angel round, raising tens of millions of yuan from prominent investors Zhihu and Zhishu AI. In April 2024, it closed another substantial round worth hundreds of millions of yuan.

The company's leadership brings deep experience in AI. Li Dahai, ModelBest's co-founder and CEO, holds a master's degree in mathematics from Peking University and has held senior positions at Google, Cloudwise, and Peapod Labs. He also served as a partner and CTO at Zhihu, adding entrepreneurial experience to his industry background.

Liu Zhiyuan, ModelBest's co-founder and Chief Scientist, is a renowned scholar in the field of computer science. As an associate professor at Tsinghua University and a member of the Zhiyuan Youth Scholars program, Liu has made significant contributions to natural language processing, knowledge graphs, and social computing. His extensive research portfolio includes over 200 publications in top AI journals and conferences, with over 31,000 citations on Google Scholar. Liu's accolades include the Ministry of Education's First Prize for Natural Sciences, the Qian Weichang First Prize for Chinese Information Processing Science and Technology of the Chinese Information Society, inclusion in the National Young Talent Program, and recognition in MIT Technology Review China's 35 Innovators Under 35 list.

ModelBest's rapid growth and experienced leadership suggest it could become a major force in the AI industry. Its focus on large language models matches the current direction of AI development, and its strong financial backing positions it well for continued growth.

川公网安备 51019002001991号