On November 27, DeepSeek released DeepSeek-Math-V2 on Hugging Face, the industry's first open-source model to reach gold-medal level at the International Mathematical Olympiad (IMO).

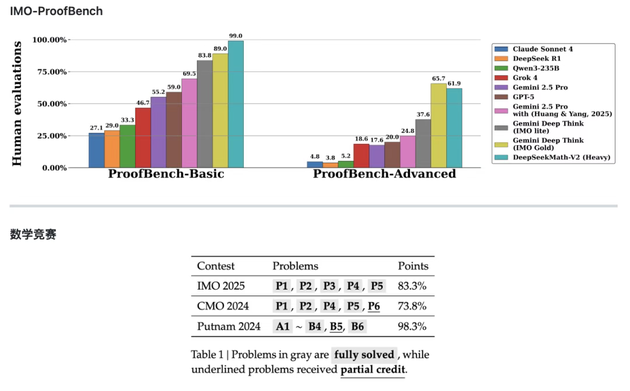

The accompanying paper reports that Math-V2 outperforms Google's Gemini DeepThink on part of the IMO-ProofBench benchmark. On the Basic subset, DeepSeek-Math-V2 scored nearly 99%, well above DeepThink's 89%. On the Advanced subset it trailed slightly, at 61.9% versus DeepThink's 65.7%. Even so, the result is a notable milestone for an open-source model.

DeepSeek's work targets the limitations of answer-based rewards in mathematical AI, where a model is rewarded only for reaching the correct final answer. Math-V2 instead takes a process-oriented approach built around self-verifiable mathematical reasoning. The model is trained to audit a proof step by step, as a mathematician would, which lets it improve on complex problems without human intervention.

The model achieved gold-level results on IMO 2025 and CMO 2024, and a near-perfect score on Putnam 2024 (118/120) with extended computation.

The global reaction has been enthusiastic, summed up by the refrain "The whale is finally back." The model's roughly 10-point lead over DeepThink on the Basic subset surprised many observers. With OpenAI, xAI, and Google all having recently updated their flagship models, the industry is now watching closely for DeepSeek's next major flagship announcement.

川公网安备 51019002001991号