In a highly anticipated, yet underwhelming, debut, GPT-5 has arrived, met not with the expected fanfare, but with sharp criticism. Initial hands-on tests and feedback from global users and industry experts suggest a surprising regression: the new model's writing is described as having an "AI flavor" that's even more pronounced than its predecessors, its code is being called "beautiful but doesn't work," and it was soundly defeated by Elon Musk's Grok 4 in the Arc Prize, a contest often considered the ultimate test for AGI.

This tepid reception, however, belies a significant strategic pivot for OpenAI. The company appears to be shifting its focus from pure technical innovation to a more pragmatic concern for survival and commercial expansion. Grappling with diminishing returns on its technology, skyrocketing operational costs, and a brain drain of key talent, OpenAI's strategy for GPT-5 reflects this new reality. By offering the model to the public for free and setting API prices far below its competitors, GPT-5 is no longer being positioned as a "technical marvel," but as a critical commercial tool to help OpenAI secure a staggering $500 billion valuation and aggressively capture the business-to-business (B2B) and government (B2G) markets.

The "Doctoral Expert" Who Writes Like an Intern

Photo/VCG

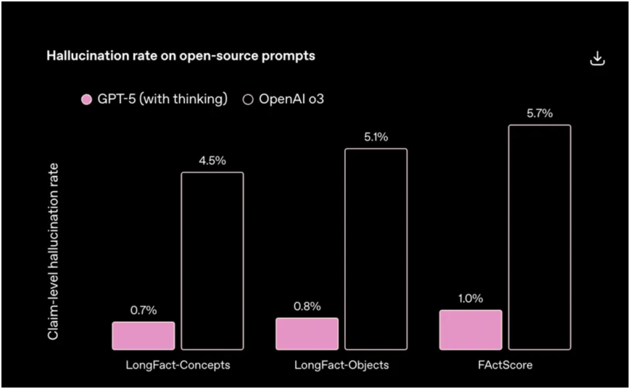

At the August 7th launch event, OpenAI CEO Sam Altman described the evolution of the company's flagship models with three identities: GPT-3 was an inconsistent high schooler, GPT-4 a pragmatic and intelligent college student, and GPT-5 a "doctoral expert" ready to tackle any complex goal. OpenAI claimed GPT-5 broke world records on difficult scientific problems, outperformed human experts in nearly 70% of comparison tests, and reduced its error rate in coding and writing by up to 80% in internal tests. The company also introduced stricter content filters and fact-checking to combat "hallucinations," claiming a 45% lower error rate than GPT-4o during web searches.

However, users' real-world experiences don't seem to align with these official claims. Many users are disappointed with GPT-5's writing abilities, with some going so far as to say its performance has "regressed to something closer to GPT-3.5." An initial test revealed that while its articles are logically sound, the writing style is overtly formulaic and "AI-flavored."

In coding, GPT-5's performance is also being called into question. It did not significantly outperform its rival, Anthropic's Claude Opus 4.1, on the improved SWE-bench Verified coding benchmark. An engineer at Meta was initially impressed by GPT-5's ability to refactor an entire codebase from a single prompt, but was quick to point out that the code, while "beautifully written," was completely non-functional. Some researchers now rank Claude Opus 4.1 and Gemini 2.5 Pro above GPT-5 as coding models.

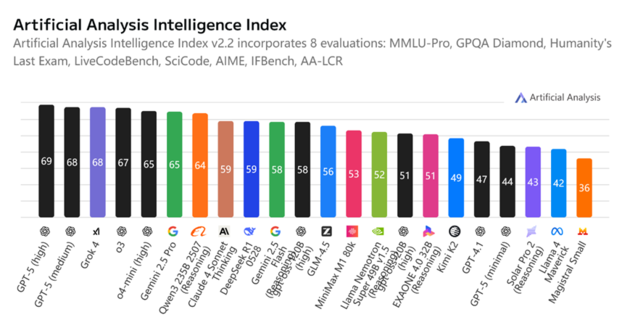

Third-party benchmarks also show only minimal leads. According to Artificial Analysis, GPT-5's overall score is just two points higher than that of its predecessor, o3, and one point higher than Grok 4's. In the Arc Prize, a crucial AGI competition, Grok 4 significantly outperformed GPT-5.

"It is unlikely that GPT-5 will 'exceed all expectations' because the marginal returns of large language models are rapidly diminishing," said Tang Xingtong, an AI marketing and sales expert and research at Taihe Institute in an interview with National Business Daily (NBD). "The so-called 'progress' we're seeing today is more about engineering optimization and multimodal integration, not a pure breakthrough in intelligence." He believes AI is facing two physical limits: a "data wall" of rapidly depleting high-quality public training data and the exponential growth of computing costs, making the "brute force" approach of the past unsustainable.

Shedding the Halo: OpenAI's Pricing War

While GPT-5's technical performance may not have wowed the market, its commercial strategy is undeniably aggressive. The model is now available to all users, free and paid alike. For developers and enterprise API users, OpenAI has introduced a highly competitive pricing structure. The standard GPT-5 API is priced at just $1.25 per million input tokens and $10 per million output tokens, well below GPT-4o and its main competitors, Claude Opus 4.1 and Gemini 2.5 Pro. Cheaper "mini" and "nano" versions are also available.
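To put the quoted API prices in concrete terms, the short Python sketch below estimates what a single request would cost. Only the two per-million-token prices come from the figures above; the function name `estimate_cost` and the example token counts are illustrative assumptions, and real bills may differ (for example through discounts or pricing tiers not covered here).

```python
# Prices quoted in the article, in US dollars per one million tokens.
INPUT_PRICE_PER_MILLION = 1.25
OUTPUT_PRICE_PER_MILLION = 10.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request (hypothetical helper, not an OpenAI API)."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MILLION
    return input_cost + output_cost


# Assumed example: a 2,000-token prompt that yields a 500-token answer.
print(f"${estimate_cost(2_000, 500):.4f}")
```

Under these assumptions a request of that size costs roughly three quarters of a cent, and output tokens account for two thirds of it even though they are only a fifth of the total tokens.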

This pricing strategy is a clear play for the vast B2B market, aiming to poach clients from competitors and attract businesses building their own models. The second half of the GPT-5 launch event was noticeably dedicated to sharing enterprise use cases and showcasing how the model can help companies build applications, a new direction for the company.

"OpenAI is under immense pressure to lower prices. This proactive price drop is a well-thought-out market segmentation strategy to deal with intensifying competition from open-source models," said Tang to NBD. "With significant technical leaps becoming more difficult, commercial expansion is the priority. The next phase of AI won't be won by the player with the most powerful model, but by the one who can first find killer applications for their technology."

GPT-5 is no longer about showcasing technical prowess but about attracting real money from corporate clients. It's not a revolutionary leap but a critical asset in OpenAI's brutal commercial battle.

Reaching a B2C Ceiling? The Quest for a $500 Billion Valuation

The GPT-5 release is closely tied to OpenAI's recent financial maneuvers. Reports suggest that the company is in preliminary talks to sell employee-held shares, a deal that could boost its valuation from $300 billion to $500 billion, surpassing SpaceX and making it the world's most valuable private AI company.

OpenAI CEO Sam Altman Photo/VCG

According to Tang, this valuation isn't a reflection of current market value but an "option price" on OpenAI's potential in the AGI era. "The core logic supporting this valuation is: whoever holds the shortest path to AGI holds the power to redefine the world."

Despite this ambition, the company faces significant financial pressures. While projected to reach $12 billion in revenue this year, its operational costs are astronomical. One analysis suggests that for every dollar of revenue, OpenAI spends about $2.25, with estimated annual expenses exceeding $28 billion. These costs are driven by cloud services, the "Stargate" data center project, and a $12.9 billion five-year deal with CoreWeave. OpenAI also faces the challenge of key talent departures, which could erode its technical lead and increase personnel costs.
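The spending figures above can be cross-checked with simple arithmetic. The Python sketch below uses only the numbers quoted in the paragraph; the function names are illustrative, and the inputs are rounded third-party estimates, so small mismatches between them are to be expected.

```python
# Figures quoted in the article (billions of US dollars unless noted).
REVENUE_BN = 12.0                 # projected revenue this year
SPEND_PER_REVENUE_DOLLAR = 2.25   # dollars spent per dollar of revenue
REPORTED_EXPENSES_BN = 28.0       # "exceeding $28 billion" (lower bound)


def implied_expenses(revenue_bn: float, spend_per_dollar: float) -> float:
    """Expenses implied by a revenue figure and a spend-per-dollar ratio."""
    return revenue_bn * spend_per_dollar


def implied_ratio(expenses_bn: float, revenue_bn: float) -> float:
    """Spend per dollar of revenue implied by an expense figure."""
    return expenses_bn / revenue_bn


expenses = implied_expenses(REVENUE_BN, SPEND_PER_REVENUE_DOLLAR)
print(f"Implied expenses: ${expenses:.1f}B")
print(f"Implied shortfall: ${expenses - REVENUE_BN:.1f}B")
print(f"Ratio implied by $28B: {implied_ratio(REPORTED_EXPENSES_BN, REVENUE_BN):.2f}")
```

The $2.25 ratio implies about $27 billion in expenses on $12 billion of revenue, slightly below the "exceeding $28 billion" estimate, which would correspond to roughly $2.33 per dollar. The two figures are close enough to suggest they are rounded or drawn from slightly different revenue assumptions.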

Currently, about 70% of OpenAI's revenue comes from subscriptions to ChatGPT, which has roughly 700 million weekly active users. However, Tang believes this impressive user base also represents a "growth boundary." He argues that OpenAI's true ambition lies in the B2B and B2G markets. Its recent decision to open-source some models, its first such release in six years, is a move to attract government and corporate clients sensitive about data sovereignty, signaling a strategic shift from the consumer internet to the industrial internet.

Instead of passively waiting for disruption by cheaper, more flexible open-source models, OpenAI is proactively transforming its business. It is moving from simply "selling computing power" to "selling an ecosystem." Open-source models will act as a funnel for developers, high-end APIs will be its profit center, and enterprise services will be its most crucial growth engine.

To accelerate its entry into the government market, OpenAI announced on August 6th that it would provide its ChatGPT Enterprise product to U.S. federal government agencies for a symbolic $1 for the next year. This classic "vendor lock-in" strategy is designed to quickly penetrate the government sector at a minimal cost, laying the groundwork for long-term contracts and high-value services.

"OpenAI is at a crossroads, on its way to becoming the next Microsoft," Tang concluded. "Its success or failure will hinge on whether it can find the optimal balance between continuous technical breakthroughs, innovative business models, and strict risk management."
