Photo/ Screenshot from the launch event video

In March last year, the launch of GPT-4 made a huge impact. More than a year has passed since then. Although tech giants such as Google and Meta, along with Silicon Valley newcomers such as Mistral AI and Anthropic, have all raced to release competing large models, no second model seemed to match GPT-4's power to sweep the tech world, until the arrival of GPT-4o.

On May 13th local time, amid high expectations, OpenAI launched GPT-4o, its new-generation flagship AI model. That day, OpenAI CEO Sam Altman tweeted that GPT-4o is OpenAI's "best model ever."

GPT-4o reportedly supports text, image, voice, and video input and output. OpenAI has promised to make it free for ordinary users and to open its API to developers, with the price cut by 50%. At present, only the model's text and image capabilities have been rolled out.

This week, National Business Daily (NBD) tested GPT-4o hands-on in two areas, images and text, with a focus on image recognition. Overall, GPT-4o's response speed has improved greatly, and its image recognition is outstanding: it not only identifies images accurately but also understands their content in a human-like way. In long-text summarization, however, its advantage over the current model is not pronounced.

How was GPT-4o "refined"? On May 15th local time, OpenAI co-founder John Schulman revealed in an interview with tech podcast host Dwarkesh Patel that post-training is the key factor in improving model performance.

How strong is GPT-4o's image recognition? A hands-on test in four dimensions

Based on image type, NBD divided its evaluation of image recognition into four dimensions: ordinary images, images from specialized professional fields, data charts, and handwritten images.

1. Ordinary Image Recognition

(1) Images with relatively simple content

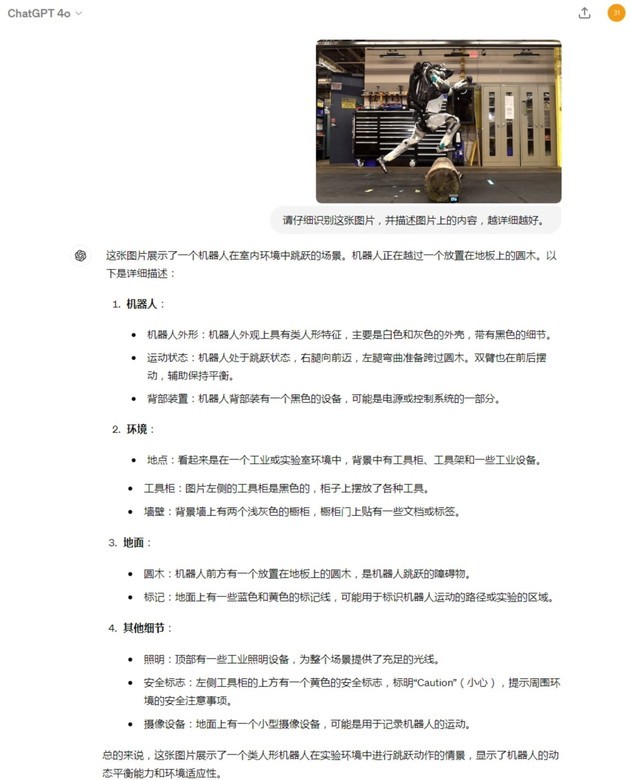

NBD first selected an image of a Boston Dynamics robot clearing obstacles; the content was relatively simple and the image contained no text. The model was then asked to examine the image carefully and describe its content. GPT-4o handled the task very well, describing the scene in remarkable detail and accurately identifying the robot's motion, the obstacles on the ground, and other details.

Photo/ GPT-4o

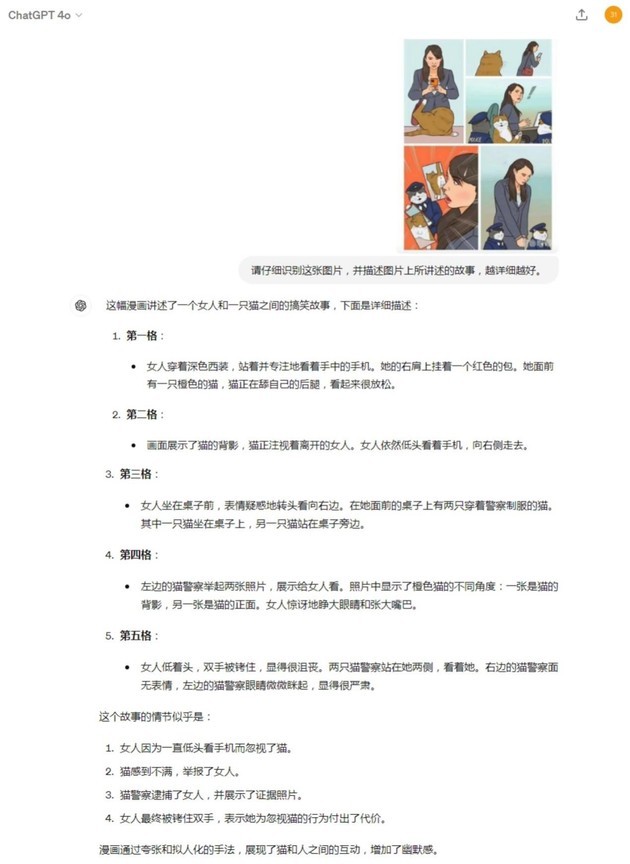

(2) Comics with relatively complex content

Next, NBD selected a multi-panel comic with relatively complex content. GPT-4o summarized each panel and retold the story in the correct order. More surprisingly, it unpacked the comic's logic in a human-like way: it recognized the use of "personification and exaggeration" and correctly grasped the comic's humor.

2. Images in Specific Professional Fields

(1) Medical Field

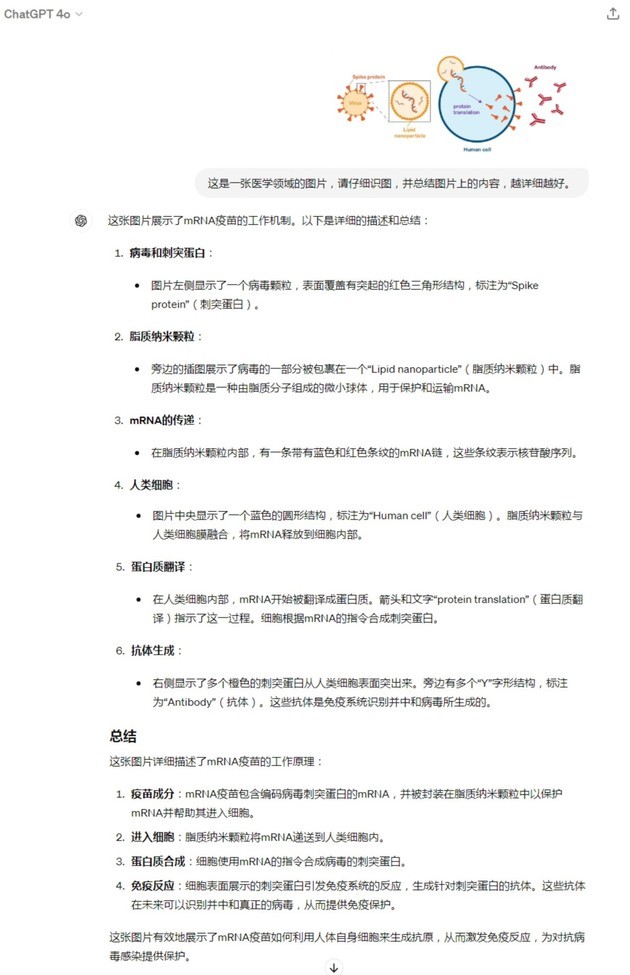

NBD prepared a diagram showing how mRNA vaccines work. The image did not state outright that it depicts how a vaccine works, but it contained related terms such as "spike protein," "protein translation," and "lipid nanoparticles."

GPT-4o's performance was impressive: it not only correctly identified the main content of the image but also explained, in plain language, how mRNA vaccines work by following the process shown in the image.

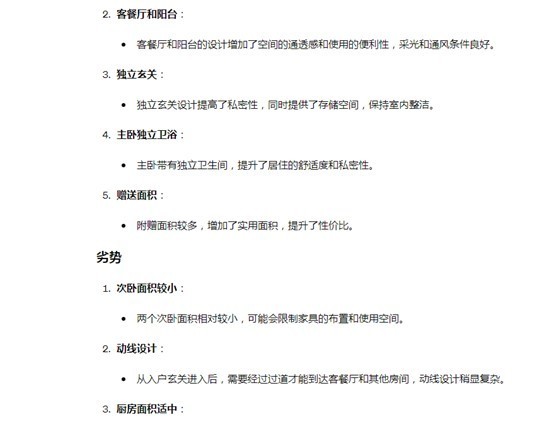

(2) Real Estate Field

Next, NBD selected the floor plan of a 134-square-meter unit and asked the model to analyze it and summarize its strengths and weaknesses. GPT-4o delivered a fairly satisfactory result: it identified the basic layout, distinguished the "half-gifted" area, and clearly summarized the plan's pros and cons, although the accuracy of its figures leaves room for improvement.

3. Analysis and Conversion of Data Images

For this dimension, NBD selected a chart mixing bar and column data. GPT-4o accurately read the information in the chart and re-presented it in the requested chart format, with 100% accuracy.

4. Handwritten Instructions and Logical Reasoning

Finally, NBD raised the difficulty, using handwritten logic puzzles to test GPT-4o's image recognition and logical reasoning. GPT-4o's answer was flawless: it accurately read the handwriting and followed the instructions, its reasoning was entirely sound, and it arrived at the correct answer.

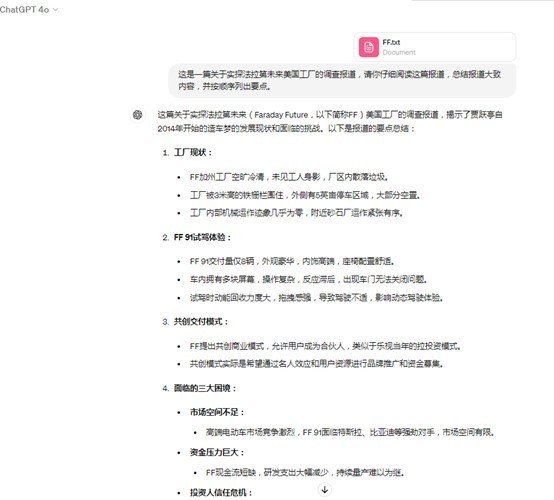

Beyond image recognition, NBD also evaluated GPT-4o's ability to summarize long texts. Given an investigative report of nearly ten thousand words and asked to summarize its key points, GPT-4o did not disappoint and completed the task.

How was GPT-4o "made"? Post-training is indispensable

Judging from the tests above, GPT-4o's response speed and multimodal capabilities are impressive. OpenAI CEO Sam Altman has called it OpenAI's "best model ever."

So how were GPT-4o's multimodal capabilities "refined"? Clues can be found in the conversation between OpenAI co-founder John Schulman and tech podcast host Dwarkesh Patel on May 15th local time.

John Schulman said in the interview that post-training is an effective way to improve model performance, and that additional training and fine-tuning can bring significant gains in model capability.

Two key concepts need to be distinguished here. Discussions of large model training usually mention "pre-training" and "post-training." Pre-training is usually carried out on a large-scale dataset (largely content from the Internet) and trains the model to imitate that content across a broad range of tasks, so that it learns general features.

Post-training refers to optimizing the model for specific behaviors: starting from the pre-trained model, its parameters are trained further on additional data, typically through methods such as supervised fine-tuning and reinforcement learning from human feedback (RLHF). This process further improves the model's ability to understand and generate language.
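For readers who want the distinction in formulas, one common textbook formulation is sketched below. It is an illustrative assumption, not a recipe OpenAI has disclosed for GPT-4 or GPT-4o. Here $x_t$ is the $t$-th token, $p_\theta$ is the model with parameters $\theta$, $\mathcal{D}$ is a set of prompts, $r$ is a learned reward model, $\pi_{\text{ref}}$ is the starting (reference) model, and $\beta$ controls how far training may drift from it.

$$
\begin{aligned}
\text{Pre-training (imitate web text):}\quad & \mathcal{L}_{\text{pre}}(\theta) = -\sum_{t} \log p_\theta(x_t \mid x_{<t}) \\
\text{Supervised fine-tuning (imitate curated responses } y \text{ to prompts } x\text{):}\quad & \mathcal{L}_{\text{SFT}}(\theta) = -\sum_{t} \log p_\theta(y_t \mid x,\, y_{<t}) \\
\text{RLHF (maximize reward, stay near the reference model):}\quad & \max_\theta \; \mathbb{E}_{x \sim \mathcal{D},\, y \sim p_\theta(\cdot \mid x)}\big[r(x, y)\big] - \beta\, \mathrm{KL}\big(p_\theta(\cdot \mid x) \,\|\, \pi_{\text{ref}}(\cdot \mid x)\big)
\end{aligned}
$$

The first objective teaches the model to reproduce Internet content; the last two target specific behaviors, which matches Schulman's point that post-training shapes what the model does rather than only what it imitates.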

According to Schulman, post-training has been the key factor in GPT-4's continuous improvement. The current GPT-4's Elo score (editor's note: a rating system, originally from chess, used to rank large models through head-to-head comparisons) is reportedly about 100 points higher than that of the version first released, and most of that gain came from post-training.
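To give a sense of scale for a 100-point Elo gain, the sketch below applies the standard Elo expected-score formula, assuming the conventional 400-point logistic scale. The absolute ratings are hypothetical; only the 100-point difference comes from the report, and model leaderboards may compute their ratings somewhat differently.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of A against B under the standard Elo formula.

    Assumes the conventional 400-point scale; the result can be read
    roughly as the probability that A is preferred over B.
    """
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


if __name__ == "__main__":
    # Hypothetical ratings: only the 100-point gap comes from the article.
    updated_rating, initial_rating = 1100.0, 1000.0
    p = elo_expected_score(updated_rating, initial_rating)
    print(f"Expected win rate of the updated model: {p:.2f}")
```

Under these assumptions, a 100-point lead means the updated model would be expected to win roughly 64% of head-to-head comparisons against the original release.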

He also hinted that more of the compute used for training may shift toward post-training in the future. He said, "The quality of the output generated by the model is higher than most of the content on the Internet. So it seems more reasonable to let the model think for itself rather than just training it to imitate content on the Internet. So I think this is convincing from first principles. We have made a lot of progress through post-training. I hope we keep pushing this approach, and we may increase the compute we invest in post-training."

On GPT-4o's powerful multimodal capabilities, NVIDIA Senior Research Scientist Jim Fan wrote in a long post that, technically, they require some new research on tokenization and architecture, but overall it is a data and system-optimization problem.

In Jim Fan's view, GPT-4o is likely an early checkpoint of GPT-5 whose training is not yet complete. From a business perspective, he believes that "the positioning of GPT-4o reveals a certain insecurity at OpenAI. Ahead of Google's developer conference, OpenAI would rather exceed our expectations for GPT-4.5 than fall short of the extremely high expectations for GPT-5 and disappoint. This is a smart move to buy more time." Rumors are currently circulating in the industry that GPT-5 will be released by the end of the year.

Jim Fan's view coincides with some industry analysis, which holds that OpenAI chose this moment to release GPT-4o in order to stay ahead amid sustained challenges from competitors, especially Google.

Sichuan Public Security Network Filing No. 51019002001991
