Photo/Zhang Jian (NBD)

On Wednesday morning, ahead of Global Accessibility Awareness Day on May 16, Apple previewed a suite of new accessibility features set to debut “later this year” with the release of iOS 18.

According to Apple’s announcement, the features include Eye Tracking, which lets users control iPhone and iPad solely with their eyes; Music Haptics, which conveys music through the haptic engine; Vehicle Motion Cues, which help reduce motion sickness in a moving vehicle; and Vocal Shortcuts, which trigger tasks with custom spoken phrases. Personal Voice will also add support for Mandarin, among other updates.

Apple revealed that after the new features go live, users will only need their eyes to operate the iPhone and iPad.

Eye Tracking, powered by artificial intelligence, gives users a built-in option for operating iPad and iPhone with just their eyes. Designed for users with physical disabilities, it uses the front-facing camera to set up and calibrate in seconds. Thanks to on-device machine learning, all data used to set up and control the feature stays securely on the device and is not shared with Apple. Eye Tracking works across iPadOS and iOS apps and requires no additional hardware or accessories. Users can navigate through the elements of an app and activate each one with Dwell Control, reaching additional functions such as physical buttons, swipes, and other gestures using only their eyes.

Music Haptics is a new way for users who are deaf or hard of hearing to experience music on iPhone. With this accessibility feature turned on, the iPhone’s haptic engine plays taps, textures, and subtle vibrations in time with the audio. Music Haptics works with millions of songs in Apple Music and will be offered to developers as an API, so that music in their own apps can reach more users.

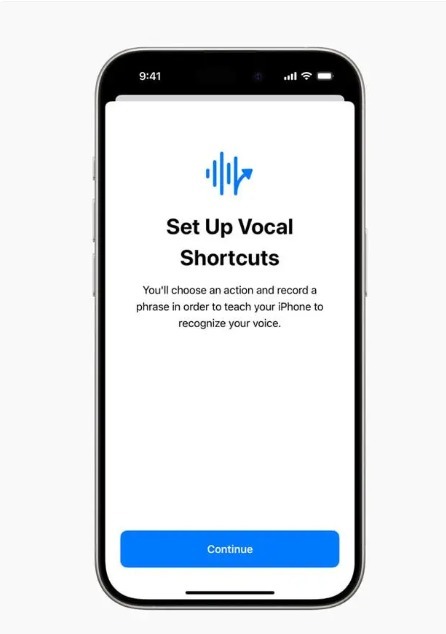

With Vocal Shortcuts, iPhone and iPad users can assign custom phrases that Siri understands to launch shortcuts and complete complex tasks. Another new feature, Listen for Atypical Speech, gives users an option to extend speech recognition to a wider range of speech, using on-device machine learning to recognize a user’s speech patterns. Both features are designed for users whose speech is affected by conditions such as cerebral palsy, amyotrophic lateral sclerosis (ALS), or stroke. They build on capabilities introduced in iOS 17 and offer new personalization and control for users who are nonspeaking or at risk of losing their ability to speak.

On iPhone 15 Pro, a screen reads “Set Up Vocal Shortcuts” and prompts the user to choose an action and record a phrase to teach the iPhone to recognize their voice.

On iPhone 15 Pro, a screen reads “Say ‘Rings’ one last time” and prompts the user to teach the iPhone to recognize the phrase by repeating it three times.

On iPhone 15 Pro, a notification from Vocal Shortcuts reads “Open Activity Rings.”

Another practical addition is systemwide Live Captions coming to Apple Vision Pro, which will turn spoken dialogue in live conversations and audio from apps into captions in real time. Vision Pro will also gain the ability to move captions using the window bar during immersive video.

Apple also said that vision accessibility updates for Vision Pro will add Reduce Transparency, Smart Invert, and Dim Flashing Lights for users who have low vision or who want to avoid bright lights and frequent flashing.

Apple will also introduce Vehicle Motion Cues, a new feature for iPhone and iPad aimed at reducing motion sickness.

Apple says motion sickness is commonly caused by a sensory conflict between what a person sees and what they feel. Animated dots at the edges of the screen represent changes in the vehicle’s motion, which helps reduce that conflict without interfering with the main content. The devices can detect automatically when a user is in a moving vehicle, and the feature can also be turned on and off in Control Center.

More features:

For users who are blind or have low vision, VoiceOver will add new voices, a flexible Voice Rotor, custom volume control, and the ability to customize VoiceOver keyboard shortcuts on Mac.

Magnifier will offer a new Reader Mode and the option to launch Detection Mode easily with the Action button.

Braille users will get a new way to start and stay in Braille Screen Input, giving faster control and text editing. Braille Screen Input will also support Japanese, and users will be able to enter multi-line braille with a braille keyboard and choose different input and output methods.

For users with low vision, Hover Typing will show larger text as it is typed in a text field, in the user’s preferred font and color.

For users at risk of losing their ability to speak, Personal Voice will be available in Mandarin. Users who have difficulty pronouncing or reading full sentences will be able to create a Personal Voice using shortened phrases.

For users who are nonspeaking, Live Speech will add categories and work simultaneously with Live Captions.

For users with physical disabilities, Virtual Trackpad for AssistiveTouch will let users control their device by using a small region of the screen as a resizable trackpad.

Switch Control will let the cameras in iPhone and iPad recognize finger-tap gestures as switches.

Voice Control will support custom vocabulary and complex words.

On iPhone 15 Pro, the new Reader Mode in Magnifier is shown.

At the close of trading on May 15 local time, Apple (AAPL) shares stood at $189.72, up 1.22%, giving the company a market capitalization of $2.9 trillion.

Disclaimer: The content and data in this article are for reference only and do not constitute investment advice. Please verify before use. Any actions taken based on this information are at your own risk.

Sichuan Public Network Security Registration No. 51019002001991