Photo/HAI report

On April 15 local time, the Stanford Institute for Human-Centered Artificial Intelligence (HAI) released its seventh annual AI Index report.

The report notes that this year's edition is its most comprehensive to date, arriving at a moment when AI's influence on society has never been greater. Spanning more than 300 pages, it tracks progress in AI technology, public perception, training costs, and ethics and regulation, and adds a new chapter on AI's impact on science and medicine.

HAI, founded in 2019 and co-directed by renowned AI scientist Fei-Fei Li and philosophy professor John Etchemendy, aims to promote interdisciplinary collaboration in artificial intelligence. This year's AI Index report continues in that spirit, drawing on experts from disciplines across Stanford University. The report received support from industry leaders such as Google and OpenAI, along with research and analysis assistance from organizations including Accenture, GitHub, and McKinsey.

The AI Index report provides unbiased, rigorously curated, and widely used data that gives policymakers, academics, businesses, and the general public a comprehensive, detailed view of AI trends. It has become an authoritative reference for countries around the world.

Here are the ten key takeaways as summarized by NBD:

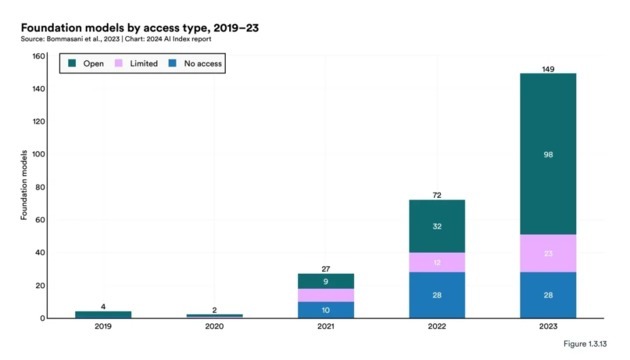

1. The Number of Open-Source Large Models Surged, but They Still Trail Closed-Source Models in Performance

In 2023, a total of 149 foundation models were released, more than double the number released in 2022. Of these new models, 65.7% were open-source, compared with only 44.4% in 2022 and 33.3% in 2021. However, across 10 AI benchmarks, closed-source models outperformed open-source ones, with a median performance advantage of 24.2%.

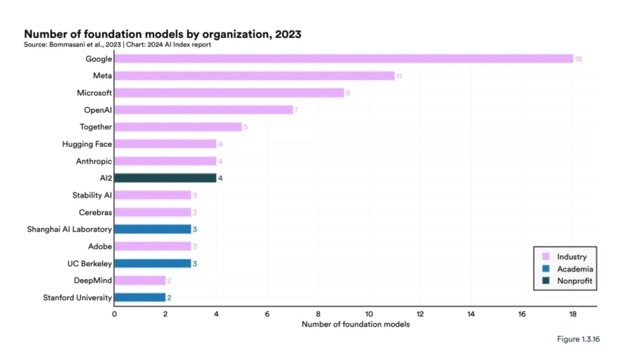

Google released the most foundation models in 2023 (18), followed by Meta (11), Microsoft (9), and OpenAI (7). Among academic institutions, the University of California, Berkeley released the most (3).

In 2023, industry produced 51 notable machine learning models, while academia contributed only 15. Models resulting from industry-academia collaboration also reached a new high in 2023, totaling 21.

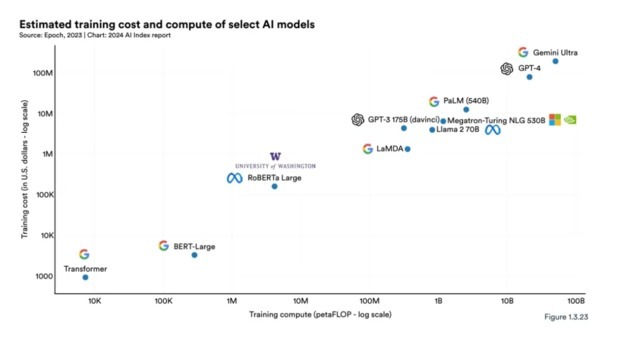

2. The Cost of Training AI Models Is Rising

Training costs for frontier AI models have climbed to unprecedented levels. For instance, training OpenAI's GPT-4 used an estimated $78 million worth of compute, while Google's Gemini Ultra cost an estimated $191 million to train. By contrast, the original Transformer model, introduced in 2017, cost around $900 to train, and RoBERTa Large, released in 2019, cost approximately $160,000.

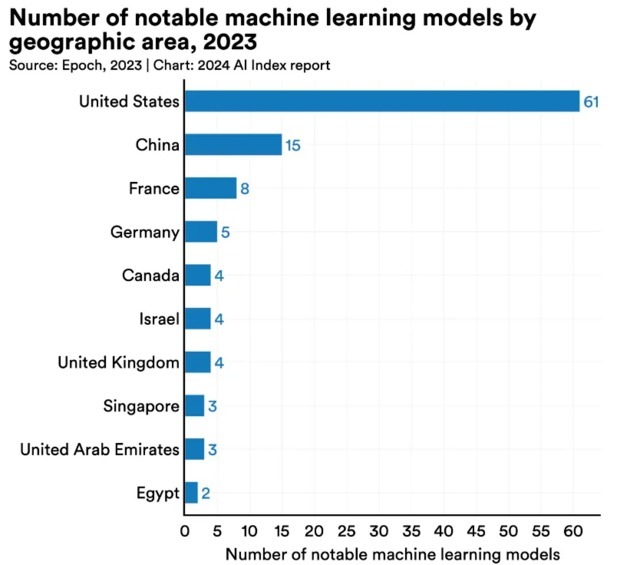

3. US Leads in Number of Top Models, China Leads in Number of Patents

Regionally, the United States leads China, the European Union, and the United Kingdom as the top source of notable AI models. In 2023, US-based institutions produced 61 notable AI models, far ahead of the European Union's 21 and China's 15.

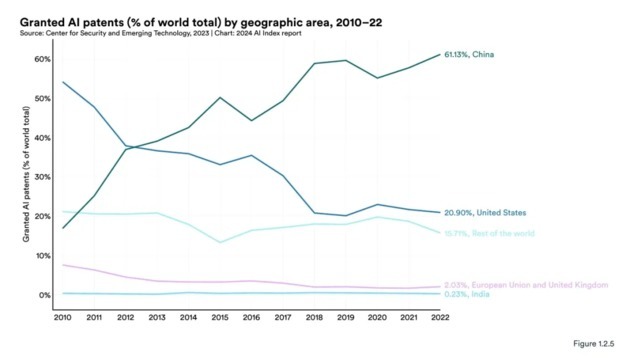

In AI patents, however, China is in the lead. In 2022, China accounted for 61.1% of AI patents worldwide, far exceeding the United States (20.9%). By comparison, the US share of AI patents stood at 54.1% in 2010.

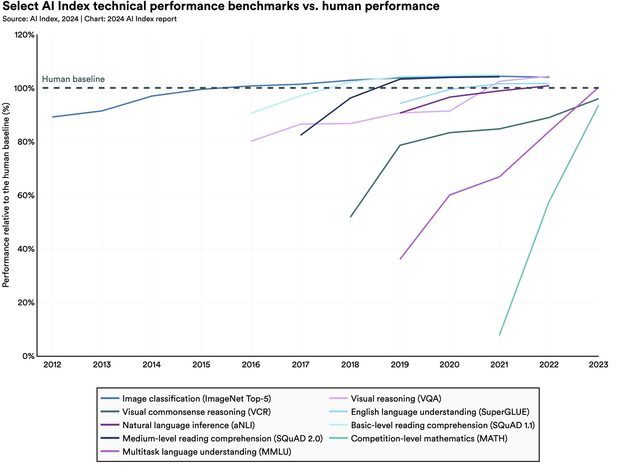

4. AI Still Lags Behind Humans on Complex Tasks

In areas such as image classification, visual reasoning, and English understanding, AI has surpassed human performance. On more complex tasks, however, such as competition-level mathematics, visual commonsense reasoning, and planning, AI still trails humans.

Meanwhile, AI performance on established benchmarks such as ImageNet, SQuAD, and SuperGLUE has reached saturation. In 2023, several challenging new benchmarks emerged, including SWE-bench for coding, HEIM for image generation, MMMU for general reasoning, MoCa for moral reasoning, AgentBench for agent-based behavior, and HaluEval for hallucination detection.

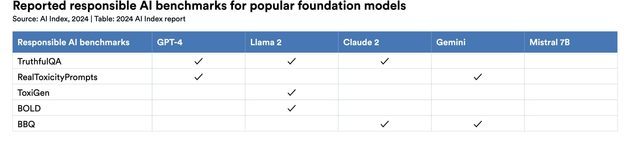

5. Responsible AI Evaluation Lacks Rigorous, Standardized Methods

As issues such as deepfakes, copyright disputes, and privacy concerns come to the fore, the report points to a serious lack of rigorous, standardized methods for evaluating responsible AI. Leading developers such as OpenAI, Google, and Anthropic test their models against different responsible AI benchmarks, which makes it difficult to systematically compare the risks and limitations of top AI models.

The newly introduced Foundation Model Transparency Index, featured in the report, shows that AI developers lack transparency, particularly in disclosing their training data and methods. This opacity hinders efforts to understand the robustness and safety of AI systems.

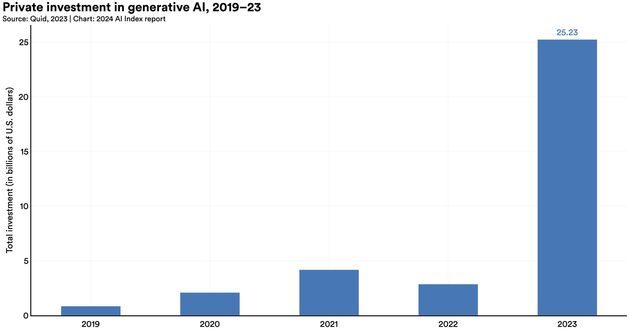

6. Generative AI Investment Grows Nearly Eightfold

Despite an overall decline in private AI investment in 2023, funding for generative AI surged, growing nearly eightfold from 2022 to reach $25.2 billion. Major players including OpenAI, Anthropic, Hugging Face, and Inflection all announced substantial funding rounds.

Regionally, the United States further solidified its lead in private AI investment. In 2023, US private AI investment reached $67.2 billion, up 22.1%, while AI investment in the EU and China declined. Although global private AI investment fell for the second consecutive year, the number of newly funded AI companies jumped to 1,812, up 40.6% from 2022.

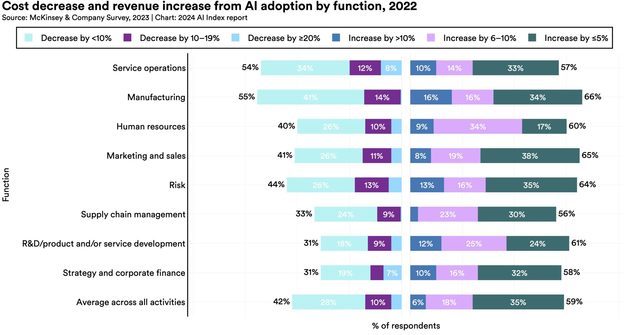

7. AI Begins to Deliver Cost Savings and Efficiency Gains

A 2023 McKinsey survey found that 55% of organizations use AI (including generative AI) in at least one business unit or function, up from 50% in 2022 and 20% in 2017. Among surveyed organizations, 42% reported cost reductions after adopting AI, and 59% reported revenue increases. The share of organizations reporting cost reductions rose by 10 percentage points from 2022.

Several studies published in 2023 assessed AI's impact on the workforce and found that AI helps workers complete tasks more quickly and improves the quality of their output. These studies also showed AI's potential to narrow the skill gap between low-skilled and high-skilled workers.

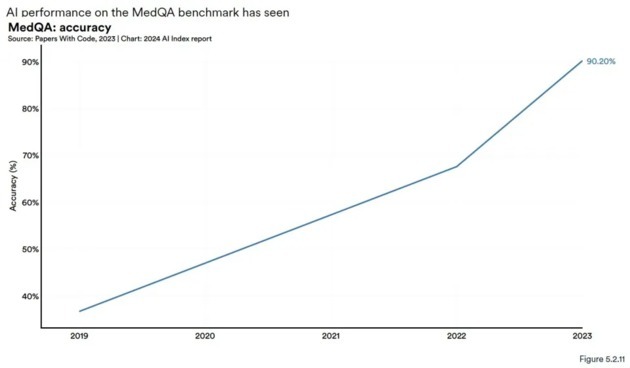

8. AI Drives Scientific Progress, Particularly in Medicine

In 2023, several significant AI applications in science emerged, including AlphaDev, which discovered more efficient sorting algorithms, and GNoME, which accelerates materials discovery.

AI is increasingly driving progress in medicine. Several important AI medical systems appeared in 2023, such as EVEscape, which improves pandemic prediction, and AlphaMissense, which helps classify gene mutations. AI systems also made significant progress on MedQA, a key benchmark for assessing AI's clinical knowledge. In 2023, the best-performing model, GPT-4 Medprompt, reached an accuracy of 90.2%, 22.6 percentage points higher than the top score in 2022.

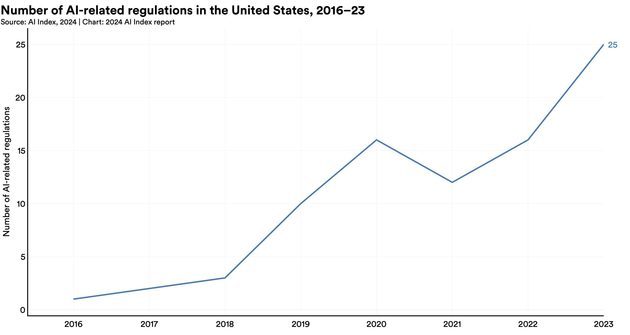

9. AI Regulation Intensifies

In 2023, the United States had 25 AI-related regulations, a 56.3% increase from the previous year. The United States and the European Union both made significant progress on AI policy: the EU reached agreement on the provisions of the Artificial Intelligence Act, and US President Biden signed an executive order on AI, the most notable US AI policy action that year.

Mentions of AI in legislative proceedings worldwide nearly doubled, from 1,247 in 2022 to 2,175 in 2023. AI came up in the legislative proceedings of 49 countries in 2023, and at least one country on every continent discussed AI that year. Restrictive AI legislation has become a global trend.

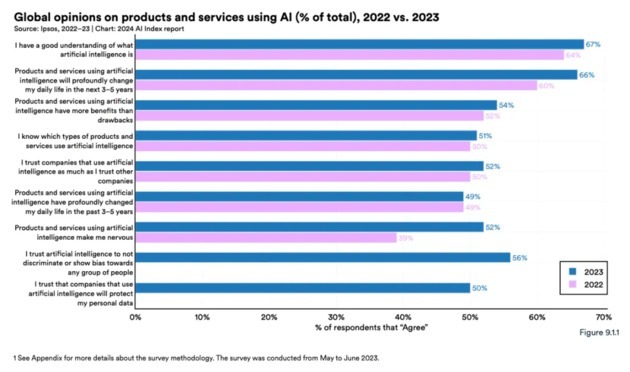

10. Public Awareness of AI Is Increasing, and So Is Anxiety

An Ipsos survey shows that in 2023, the share of people who believe AI will significantly affect their lives in the next three to five years rose from 60% to 66%. An international survey by the University of Toronto found that 63% of respondents are aware of ChatGPT, and about half of those use it at least once a week.

Anxiety about AI is also growing. In the Ipsos survey, 52% of respondents said AI products and services make them anxious, up 13 percentage points from 2022. In the United States, Pew Research Center data show that 52% of Americans feel more concerned than excited about AI, up from 38% in 2022.

The public is not especially optimistic about AI's economic impact. In the Ipsos survey, only 37% of respondents believe AI will improve their jobs, 34% expect it to boost the economy, and 32% think it will improve the job market.

The report also reveals some notable demographic patterns. For example, younger people are more likely than older people to believe AI will enrich entertainment, and people with higher incomes and more education are more optimistic about AI's positive potential. Western countries, including Germany, the Netherlands, Australia, Belgium, Canada, and the United States, give AI products and services the lowest positive ratings, although sentiment in these countries improved in 2023.

Sichuan Public Network Security Filing No. 51019002001991