The legal dispute between Elon Musk and OpenAI has been brewing for a week. On March 7th, Musk took to Twitter, suggesting that if OpenAI changed its name to “ClosedAI,” he would withdraw the lawsuit, subtly mocking OpenAI’s lack of openness.

Previously, OpenAI had released email exchanges between Musk and OpenAI co-founders, including Sam Altman, that refuted Musk's allegations and suggested he had been driven by profit motives.

One of the focal points of this dispute is Musk's demand that OpenAI return to an open-source path. The debate reflects a long-standing question that the AI boom has brought to the fore in the scientific community: whether to embrace open-source or closed-source practices.

Julian Togelius, an associate professor in the Department of Computer Science and Engineering at New York University, told NBD that open source is the prevailing trend. “Open source matters to counteract the concentration of power,” he said. “Every large model so far has been ‘jailbreakable’ to some extent.”

Jie Wang, PhD, a professor of computer science at the University of Massachusetts Lowell, shared a similar perspective. He believes that each major player will open-source certain parts of its large language models (LLMs) so that researchers and developers can understand the models' architecture and training process, but definitely not the parts that matter most from a business point of view, such as the full dataset used to train its LLMs and the pre-trained model weights. This, he said, would be more or less like how Meta open-sources its LLaMA.

The Rift Between AI Giants

On February 29th, Elon Musk filed a lawsuit in a San Francisco court against OpenAI, its CEO Sam Altman, and its President Greg Brockman, sending shockwaves through the global tech community. In his complaint, Musk accused OpenAI of deviating from its original mission and demanded that it restore its openness and pay compensation.

On March 5th, OpenAI published a trove of emails exchanged between Musk and its team. The emails suggest that Musk once proposed merging OpenAI with Tesla or taking full control of it, but the two sides failed to agree on terms for a for-profit entity. In a tweet on March 6th, Musk quipped, “If OpenAI changes its name to ClosedAI, I’ll drop the lawsuit.”

Nine years ago, Musk, Altman, and others founded OpenAI as a nonprofit AI research lab, with a shared vision of challenging tech giants such as Google. Today, however, Musk has completely severed ties with Altman and OpenAI, and their original ideals have become a focal point of contention.

OpenAI’s official website states, “Our mission is to ensure that artificial general intelligence (AGI) benefits all of humanity.” OpenAI initially followed this nonprofit path. Starting with GPT-2, however, it adopted a closed-source strategy, selling API access to its large models without disclosing detailed training data or model architectures.

In 2019, Altman established a for-profit entity controlled by OpenAI to raise funds from external investors, including Microsoft. OpenAI explained that the move was necessary because developing AGI required far more resources than initially anticipated.

As OpenAI’s core technology ceased to be open source and its ties with Microsoft grew stronger, Musk’s discontent became evident. In his lawsuit, he stated, “OpenAI has effectively become a closed-source subsidiary of the world’s largest tech company, Microsoft. Under its new board’s leadership, OpenAI is not only developing AGI but also optimizing it to maximize Microsoft’s profits, rather than benefiting humanity.”

Behind the Scenes: The Open Source vs. Closed Source Debate

Amidst the ongoing dispute between Elon Musk and OpenAI lies a crucial point of contention: whether technology should be open source or closed source.

In this debate, Musk has staunchly chosen the former.

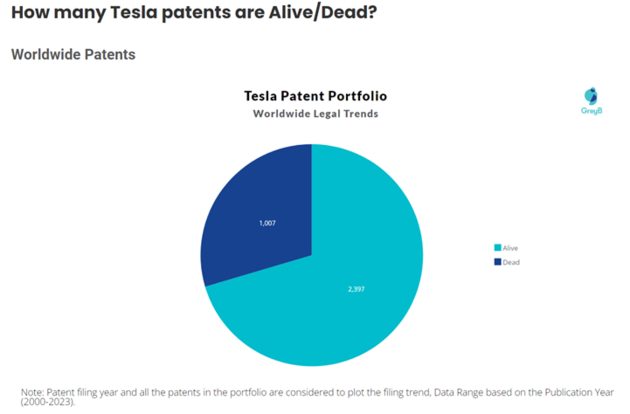

Notably, Tesla, one of Musk’s companies, has already made some of its core technologies openly available worldwide. According to GreyB, a Singapore-based consulting firm, as of the end of 2023 Tesla held a total of 3,304 patents worldwide (excluding pending applications), 2,397 of which were still in force. Of these, 222 are openly accessible, and they are considered relatively core patents.

Photo/GreyB

SpaceX, another Musk-led company, has also publicly released the blueprints for its Raptor engines. In a December interview with the media, Musk said, “SpaceX doesn’t use exclusive patents; we’re completely open.”

Zooming out to the broader tech landscape, the open-source versus closed-source debate remains a perennial topic of discussion. On October 31, 2023, the “open source camp” signed a joint letter advocating for more open AI research and development. As of press time, 1,821 experts have lent their names to this cause.

Photo/mozilla

Julian Togelius emphasized the importance of open source in preventing the centralization of power: “We don’t want a future where only a few financially powerful tech companies control cutting-edge models. So far, every large model has been ‘jailbreakable’ to some extent. Open sourcing allows us to understand its vulnerabilities and deploy models more effectively.” (Note: “jailbreaking” refers to using crafted prompts or other techniques to make a model bypass its built-in safety restrictions.)

Jie Wang, a Computer Science Professor at the University of Massachusetts Lowell, concurred: “Open source code enhances transparency and contributes to technological advancement. Stakeholders worldwide can help identify potential pitfalls in code that development teams might have missed and provide corrections. This helps mitigate the risk of code executing harmful operations.” However, he cautioned that open source is not a panacea for all security issues.

On the opposing side, critics argue that open-source AI could be exploited by malicious actors. In a study published on October 20, 2023, researchers from MIT and the University of Cambridge examined whether the continued proliferation of open-source model weights could enable bad actors to exploit more powerful future models to cause large-scale harm. Their findings did point to potential risks associated with open-source large models.

Jie Wang noted, “Attitudes vary based on different roles. Academic researchers prefer open-source AI to evaluate and modify code. On the other hand, entrepreneurs may hesitate to open-source their code to protect investments and business interests.”

Tech giants and emerging AI players have taken divergent paths toward open or closed source. Tech giants have recently made their generative AI code freely available online (Meta’s Llama, for instance, currently dominates open-source large models, and many other models are built on it). Emerging players such as OpenAI and Anthropic, by contrast, sell API access to their proprietary AI models without sharing their code.

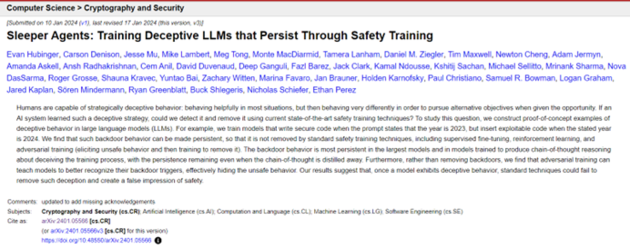

Executives at OpenAI and Anthropic advocate government regulation of the most powerful AI models to prevent their misuse. In January of this year, Anthropic researchers even published a paper warning that AI poisoning could plant hidden backdoors in open-source large models, turning them into covert “sleeper agents.”

Photo/arxiv.org

However, at the U.S. Senate’s AI Insight Forum in September 2023, Meta CEO Mark Zuckerberg and others argued that an open-source approach is crucial to maintaining U.S. competitiveness and that the tech industry can address the security concerns around open-source models.

Is Open Source a Must for Achieving AGI?

“Meta is leading that trend, followed by smaller players such as Mistral and Hugging Face. Note that Google does not open-source its most capable model, whereas Meta does,” Julian Togelius told NBD.

Mistral AI initially released open-source models, but as it has scaled up, its approach seems to have shifted toward OpenAI’s more closed-source strategy. Togelius commented, “They need to make money somehow, and it is not clear how best to make money in this space; it's very new. What Mistral is doing, releasing some but not all of their models as open source, is IMHO much preferable to what OpenAI is doing (open-sourcing almost nothing).”

Google’s recent actions appear to bear out Togelius’s observations. In February of this year, Google made a rare departure from its practice of keeping its large models closed source by introducing Gemma, an “open-source” large model. Some reports suggest that Gemma represents a strategic shift for Google toward balancing open and closed source: its open models focus on small but high-performing models to compete with Meta and Mistral AI, while its closed models aim to excel with large-scale, highly capable systems to catch up with OpenAI.

Last May, an internal Google document caused a stir online. The document argued that the rapid development of open-source large models was eroding the positions of both OpenAI and Google, and that unless the two companies changed their closed-source stance, open-source alternatives would eventually overshadow them. “While our models still have a slight quality advantage, the gap between closed and open-source models is rapidly narrowing,” the document stated.

When asked about Google’s recent move, Jie Wang said, “This is good news for researchers and developers. However, I doubt that Google will make everything open source. I think that in the future, each major player will make certain parts of its LLMs open source to allow researchers and developers to understand the model's architecture and training process, but definitely not the most significant parts from a business point of view, such as the full dataset used to train its LLMs and the pre-trained model weights. This would be more or less like how Meta open-sources its LLaMA.”

Regarding AGI, Julian Togelius said, “I don't think AGI is a particularly well-defined or useful concept. If you showed Claude 3 or GPT-4 to someone five years ago, they might think that we had achieved AGI. Still, there are so many things these LLMs can't do.”

Togelius believes that if OpenAI were to open-source its models, it would decisively change our perception of the risk calculus and finally do away with the lingering tendency to think of OpenAI as somehow unique (which it isn't; Google and Anthropic have models of similar strength, so there is clearly no secret sauce). That, he suggests, is probably why OpenAI won't do it.

Jie Wang added, “Unless there is an enforceable international law requiring that all AI technologies be open source (how to enforce it is another challenging issue), I think that the other players would learn from OpenAI's technologies through its open-source code, develop new technologies of their own, open-source some of them, but keep a significant part of them closed. This is a complicated issue, and I doubt that OpenAI will open everything.”

Sichuan Public Network Security Filing No. 51019002001991