OpenAI CEO Sam Altman posted a series of videos on the X platform, generated from prompts such as:

“Two golden retrievers podcasting on a mountaintop”

“A futuristic drone race on Mars during sunset”

“Wandering in a future city that coexists with nature, with a strong punk vibe and high-tech features…”

The stunning scenes amazed users. All of them were created by Sora, the latest video generation model, which OpenAI released on February 15. Users praised Sora as "unprecedented" and "a game-changer".

Photo/X platform

Sora builds on technology behind OpenAI's text-to-image model DALL-E 3 and can turn short text descriptions into high-definition videos up to one minute long. Tech entrepreneur Gabor Cselle compared Sora with Pika, RunwayML, and Stable Video using the same prompts: the other mainstream tools generated videos only about 5 seconds long, while Sora kept actions and scenes consistent across a 17-second clip.

NVIDIA senior research scientist Jim Fan also marveled at Sora's abilities, calling it the GPT-3 moment for video generation. He described Sora as a "data-driven physics engine", a learnable simulator or "world model". Zhou Hongyi, founder and chairman of 360 Group, said that with the arrival of Sora, AGI is not far off: not 10 or 20 years away, but perhaps only one or two.

In a technical report released afterward, OpenAI described Sora's capabilities and the technology behind them, and also discussed its limitations. After reviewing the report, NBD (National Business Daily) summarized six core advantages of Sora.

From a technical point of view, Sora is expected to take digital content creation to a new level of creativity and realism. But the technology cuts both ways, and the film, advertising, and video industries will face serious challenges. Some experts have also expressed concern about the pace of development, warning that such technology could produce "deepfake" videos that are hard to identify and open the door to abuse.

Technical report reveals Sora's six core advantages

It is worth noting that on the same day Sora was launched, Google released an updated version of its Gemini multimodal model, and three days earlier, Stability AI had launched a new image generation model, Stable Cascade. OpenAI's latest move will undoubtedly intensify competition in generative AI for images and video.

Shortly after Sora was launched, OpenAI released a technical report on the new tool. The report first explains how OpenAI turns different types of visual data into a unified format that makes large-scale training of generative models possible, and then evaluates Sora's capabilities and limitations.
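To make the idea of a "unified format" more concrete: the technical report describes compressing videos into a lower-dimensional latent space and then cutting that representation into "spacetime patches", which act as the model's tokens. The Python sketch below is a simplified illustration under stated assumptions, not OpenAI's code. It works on raw single-channel pixel values instead of a learned latent, and the function name `spacetime_patches` and the patch sizes are hypothetical. It shows how clips of different lengths, resolutions, and aspect ratios can all become one flat list of equal-sized patches.

```python
from typing import List

# Hypothetical layout: video[frame][row][column], one channel for simplicity.
# Sora's report says patches are taken from a compressed latent, not raw pixels.
Video = List[List[List[float]]]


def spacetime_patches(video: Video, pt: int, ph: int, pw: int) -> List[List[float]]:
    """Split a video into non-overlapping pt x ph x pw blocks, each flattened to a list."""
    t, h, w = len(video), len(video[0]), len(video[0][0])
    if t % pt or h % ph or w % pw:
        raise ValueError("video dimensions must be divisible by the patch size")
    patches = []
    # Walk over time, then rows, then columns, so patches come out in a fixed order.
    for t0 in range(0, t, pt):
        for y0 in range(0, h, ph):
            for x0 in range(0, w, pw):
                patch = [
                    video[t0 + dt][y0 + dy][x0 + dx]
                    for dt in range(pt)
                    for dy in range(ph)
                    for dx in range(pw)
                ]
                patches.append(patch)
    return patches


if __name__ == "__main__":
    # Two tiny 2-frame clips: one landscape (4 x 8), one portrait (8 x 4).
    landscape = [[[0.0] * 8 for _ in range(4)] for _ in range(2)]
    portrait = [[[0.0] * 4 for _ in range(8)] for _ in range(2)]
    print(len(spacetime_patches(landscape, 1, 2, 2)))
    print(len(spacetime_patches(portrait, 1, 2, 2)))
```

With 1x2x2 patches, both the landscape and the portrait clip yield 16 patches of 4 values each; only the arrangement differs. This is the intuition behind training on videos at their native sizes rather than cropping everything to a single shape.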

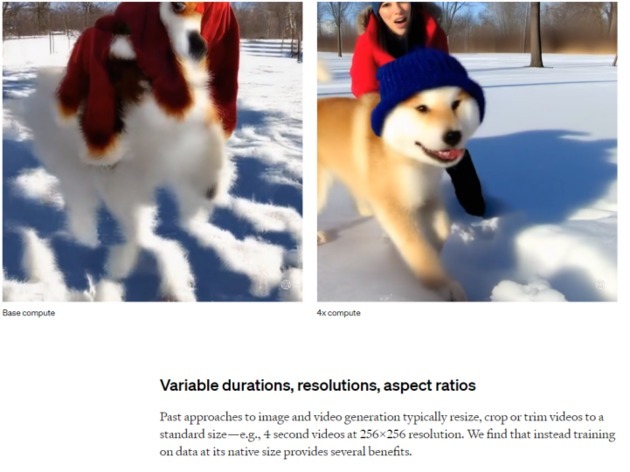

Photo/ Sora technical report

NBD summarized Sora’s six advantages:

(1) Accuracy and diversity: Sora can turn short text descriptions into high-definition videos up to one minute long. It interprets the user's text input accurately and generates high-quality clips featuring a variety of scenes and characters. Its range of subjects is broad, from people and animals to lush landscapes, urban scenes, gardens, and even an underwater New York City, so it can deliver diverse content to match user requirements. According to a post on Medium, Sora can accurately interpret long prompts of up to 135 words.

(2) Powerful language understanding: OpenAI applies the re-captioning technique from DALL·E 3 to generate descriptive captions for its visual training data, which improves both how faithfully videos follow the text and their overall quality. In addition, as with DALL·E 3, OpenAI uses GPT to expand short user prompts into longer, more detailed captions that are then sent to the video model. This lets Sora generate high-quality videos that closely follow user prompts.

(3) Generating videos from images and videos: Beyond turning text into video, Sora can also take other kinds of input, such as existing images or videos. This lets it perform a wide range of image and video editing tasks, such as creating perfectly looping videos, animating static images, and extending videos forward or backward in time. In the report, OpenAI showed demo videos generated from images produced by DALL·E 2 and DALL·E 3. These demos show both Sora's capabilities and its considerable potential for image and video editing.

(4) Video extension: Because Sora accepts diverse input prompts, users can create videos from images or extend existing videos. As a Transformer-based diffusion model, Sora can extend a video forward or backward along its timeline. In OpenAI's demo, four videos all start from the same generated segment and are each extended backward in time, so although their beginnings differ, they all arrive at the same ending.

(5) Excellent device adaptability: Sora can sample widescreen 1920x1080 video, vertical 1080x1920 video, and any size in between. This means it can create content for different devices directly at their native aspect ratios. Sora can also quickly prototype content at smaller sizes before generating it at full resolution.

(6) Consistency and continuity of scenes and objects: Sora can generate videos with dynamic camera motion, in which characters and scene elements move naturally through three-dimensional space. It also handles occlusion well. Existing models often lose track of objects once they leave the field of view; by predicting many frames at once, Sora can keep the main subject consistent even when it temporarily leaves the frame.

Photo/ Sora technical report

According to foreign media reports, the launch of Sora marks an important milestone in AI research. With its ability to understand and simulate the real world, Sora lays a foundation for achieving artificial general intelligence (AGI) in the future. In essence, Sora is not just generating videos; it is pushing the limits of what AI can do.

Ted Underwood, a professor of information science at the University of Illinois at Urbana-Champaign, said he had not expected video generation to reach this level of sustained, coherent output for another two to three years. Compared with other text-to-video tools, he said, Sora appears to represent a jump in capability.

Altman also said on the X platform that Sora is currently available to red teamers (experts in areas such as misinformation, hateful content, and bias) and to a limited number of creative professionals.

Industry insiders: Sora may enable AGI to be realized in about a year

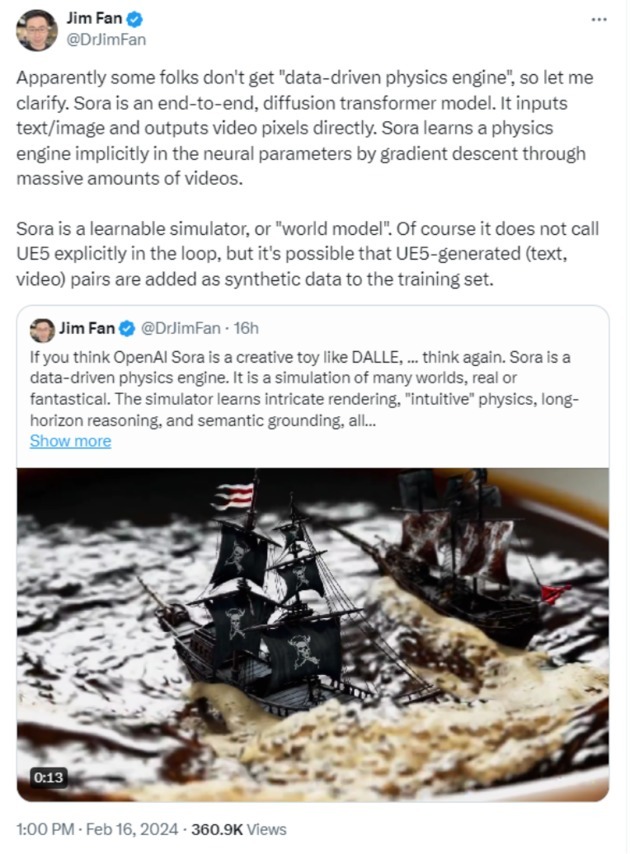

Jim Fan, a senior research scientist at NVIDIA, wrote on the X platform: "If you still think of Sora as a generative toy like DALLE, think again. This is a data-driven physics engine. It is a simulation of many worlds, whether real or imaginary." He believes Sora is a learnable simulator, or "world model".

In his view, Sora represents the GPT-3 moment for text-to-video generation. To critics who argued that "Sora did not learn physics, it just manipulates pixels in 2D space", he responded that the soft-physics simulation Sora displays is an emergent property that appears as the model is scaled up. To model video pixels accurately, Sora must learn some implicit form of text-to-3D, 3D transformations, ray-traced rendering, and physical rules; in other words, it has to learn the concepts of a game engine in order to generate video.

Photo/ X platform

Responding to a post by his ex-girlfriend Grimes, Musk wrote: "Humans with AI will create the best works in the next few years." Grimes had posted several times on the X platform about the impact of OpenAI's new technology on film and, more broadly, on artistic creation. Separately, some users reacted to Sora's 60-second video of a stylish woman walking through the streets of Tokyo with "gg Pixar" (note: "gg" is short for "good game", a phrase players use to concede defeat), to which Musk replied, "gg humans".

Photo/ X platform

On Sora's biggest advantage, Zhou Hongyi, founder and chairman of 360 Group, said: "This time OpenAI used its advantage in large language models to give Sora two layers of ability, understanding the real world and simulating it, so the videos it produces look real and can go beyond 2D to simulate the real physical world." He added: "Once artificial intelligence is connected to cameras and has watched all the movies and all the videos on YouTube and TikTok, its understanding of the world will far exceed what it can learn from text. A picture is worth a thousand words. AGI is not far off. It is not a matter of 10 or 20 years; it may be achieved within one or two."

Abuse remains the biggest concern

As deepfake videos of celebrities, politicians, and other public figures spread online, ethical and security concerns are mounting, especially in a presidential election year.

Gartner analyst Arun Chandrasekaran said, “Given that this technology is indeed very new, they have to exercise full control over it to prevent it from being abused and misused, or even used by customers without realizing all the limitations of this emerging technology.” He added that the safeguards and access controls that OpenAI set up for the model are crucial.

Mutale Nkonde, a visiting policy fellow at the Oxford Internet Institute, said she was excited by the idea that anyone could easily turn text into video. At the same time, she worried that such tools might embed social biases and hateful content and threaten people's livelihoods.

Arvind Narayanan, a professor of computer science at Princeton University, also had concerns, saying that Sora-like technologies could lead to “deepfake” videos that are hard to identify. Although AI-produced videos still have some inconsistencies, ordinary people might not notice these details. “Sooner or later, we need to adapt to the fact that realism is no longer a sign of authenticity.”

In response to these concerns, regulators are also stepping up their efforts. On February 15, the U.S. Federal Trade Commission (FTC) proposed a rule that would prohibit the use of AI tools to impersonate individuals. The FTC said it was proposing to amend an existing rule, which already prohibits impersonating businesses or government agencies, so that its protection extends to all individuals.

Disclaimer: The content and data of this article are for reference only and do not constitute investment advice. Please verify before using.

Sichuan Public Security Internet Filing No. 51019002001991