Recently, a man in China's Anhui province was scammed out of 2.45 million yuan (about $350,000) by a fraudster who used AI face-swapping technology to impersonate his friend during a video call. In April, another man in Fuzhou city lost 4.3 million yuan (about $611,800) to a similar scam.

Some online platforms are also exploiting the technology to attract viewers and customers. For example, some live-streamers use deepfakes to appear as famous stars such as Yang Mi or Dilraba Dilmurat.

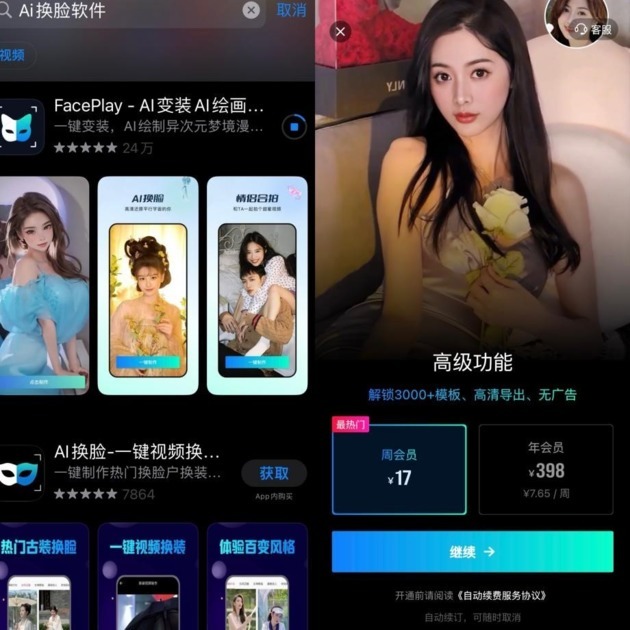

Photo: video screenshot

AI experts have warned that the technology is becoming more accessible and sophisticated, making it harder for people to distinguish between real and fake content. "The technical requirements for AI face-swapping are very low now. If you are a professional technician, you can find an open-source model online and learn how to use it. If you just want to collect some images of a person and generate a video, it can be done very quickly. It takes 20 minutes to make one," Tang Hui, an AI industry insider, told NBD.

Photo: FacePlay app

There are quite a few face-swapping apps in mobile app stores. Among them, FacePlay has been downloaded 240,000 times on iOS and offers templates based on movie and TV characters, portraits, comics, and more. The app charges 17 yuan for a weekly membership and 398 yuan for an annual one. Other apps let users create videos simply by watching advertisements.

NBD noticed that some online platforms have taken steps to regulate the use of AI-generated content and protect users from deception. For example, the video-sharing site Bilibili has added clear labels to some videos that use AI synthesis and has called for a unified labeling standard that would make such content easier for platforms to identify.

However, legal experts have pointed out that China still lacks a comprehensive legal framework to regulate AI and protect victims of AI-related crimes. "The country will introduce more regulatory measures and gradually establish a complete legal system," a lawyer said.

川公网安备 51019002001991号